100 Days of Gen AI: Day 10

Today I started on a chatbot that responds as Socrates. The repo is here: https://github.com/cmonaghan/chatbot-socrates

While I’m sure there are Socrates chatbots already out there, I want to train the model myself on a custom dataset. I found open-source text versions of Plato’s Socratic Dialogues, though at 317k lines of unstructured text, it would take a fair bit of data cleaning before this could be used to train a model.

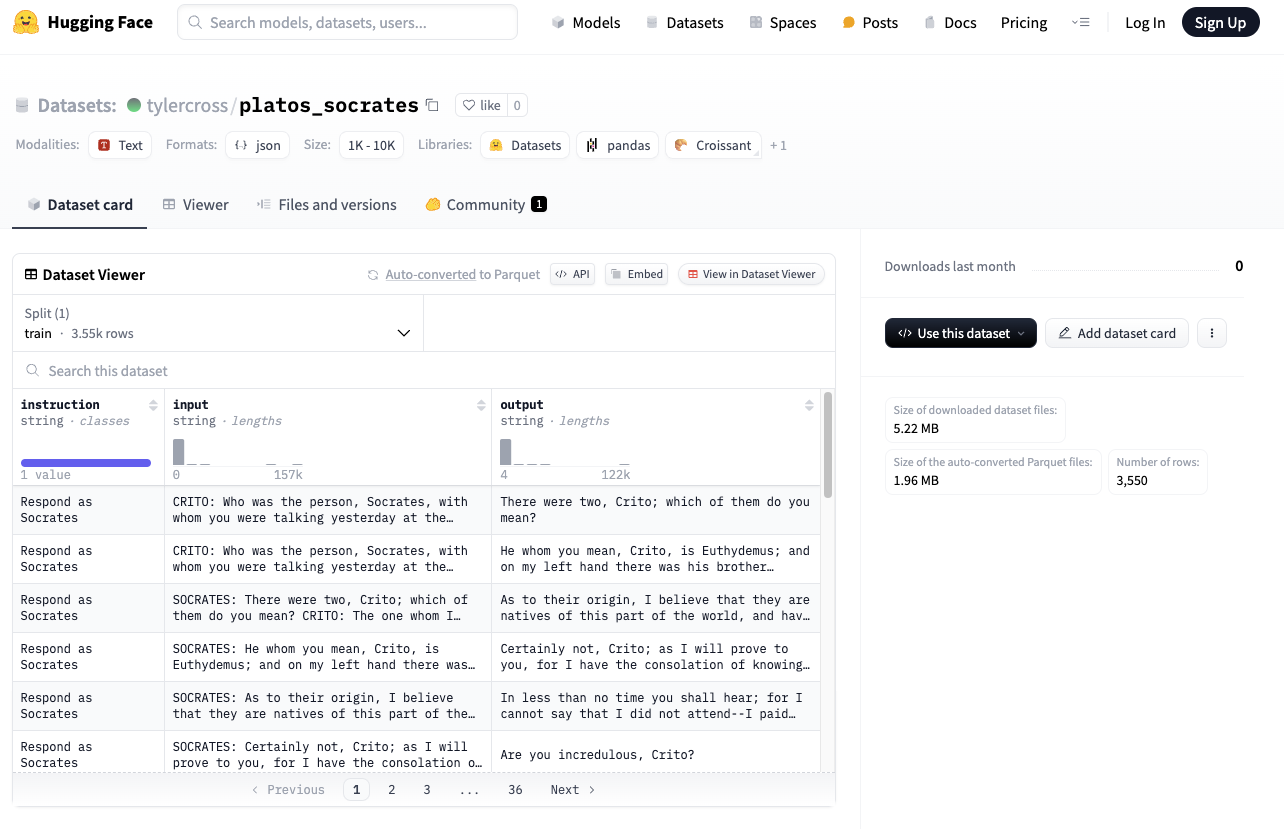
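To give a sense of what that cleaning step might involve, here is a minimal sketch that splits raw dialogue text into speaker turns and then pairs each interlocutor line with the Socrates reply that follows it. It assumes the text marks speakers with an all-caps label and a colon (for example `SOCRATES:`), which may not hold for every edition. The function names `parse_dialogue` and `socrates_pairs` are hypothetical and not taken from the repo.

```python
import re

# Assumption: each turn starts with an all-caps speaker label and a colon,
# e.g. "SOCRATES: ...". Real source files may need a different pattern.
SPEAKER_RE = re.compile(r"^([A-Z][A-Z .'-]*):\s*(.*)$")


def parse_dialogue(text):
    """Split raw dialogue text into (speaker, utterance) pairs."""
    turns = []
    speaker, parts = None, []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        match = SPEAKER_RE.match(line)
        if match:
            # A new speaker label closes the previous turn, if there was one
            if speaker is not None:
                turns.append((speaker, " ".join(parts)))
            speaker = match.group(1).title()
            first = match.group(2)
            parts = [first] if first else []
        elif line and speaker is not None:
            # A wrapped line that continues the current speaker's turn
            parts.append(line)
    if speaker is not None:
        turns.append((speaker, " ".join(parts)))
    return turns


def socrates_pairs(turns):
    """Pair each non-Socrates turn with the Socrates reply right after it."""
    return [
        (prev_text, text)
        for (prev_speaker, prev_text), (speaker, text) in zip(turns, turns[1:])
        if speaker == "Socrates" and prev_speaker != "Socrates"
    ]


# Made-up sample lines for illustration, not quoted from the dialogues
sample = "MENO: Can virtue be taught?\nSOCRATES: First tell me what\nyou think virtue is.\n"
print(socrates_pairs(parse_dialogue(sample)))
```

Pairs like these could later serve as prompt/response examples for fine-tuning. Narration, headers, and translator notes in the real files would still need their own handling.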

I started familiarizing myself with some of the core Python libraries, like transformers, and with existing Hugging Face datasets, where I found a few datasets on Socratic dialogues such as this one:

I got a simple dev environment set up where I can run the GPT-2 model locally:

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load pre-trained model and tokenizer
# Start with base GPT-2; larger checkpoints such as "gpt2-medium" can be swapped in later
model_name = "gpt2"
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)


def generate_response(prompt):
    # Tokenize the prompt; this also returns the attention mask that generate() expects
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(
        **inputs,
        max_length=150,
        num_return_sequences=1,
        no_repeat_ngram_size=2,
        do_sample=True,  # top_p and top_k only take effect when sampling is enabled
        top_p=0.95,
        top_k=60,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token, so reuse EOS
    )
    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    return response


# Test the function with a philosophical question
user_input = "What is the meaning of life?"
print(generate_response(user_input))
```

When running this, I got three answers, none of which were very good, but it’s a start!
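Part of what shapes these answers is the `top_k` and `top_p` settings passed to `generate`, which limit the tokens the model may sample from at each step. The sketch below illustrates the idea on a toy probability table using plain Python. It is a simplified illustration under my own assumptions, not the transformers implementation (which works on logit tensors), and the function name `top_k_top_p_filter` and the toy vocabulary are made up for this example.

```python
import random


def top_k_top_p_filter(probs, top_k, top_p):
    """Return the renormalized distribution left after top-k, then top-p filtering."""
    # Top-k: keep only the k most likely tokens, then renormalize
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    k_total = sum(p for _, p in ranked)
    ranked = [(token, p / k_total) for token, p in ranked]

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Renormalize so the surviving probabilities sum to 1
    p_total = sum(p for _, p in kept)
    return {token: p / p_total for token, p in kept}


# Toy next-token distribution (invented numbers)
toy_probs = {"the": 0.5, "a": 0.3, "of": 0.15, "zebra": 0.05}
filtered = top_k_top_p_filter(toy_probs, top_k=3, top_p=0.8)

# Sample one token from what survived the filters
tokens, weights = zip(*filtered.items())
print(filtered, random.choices(tokens, weights=weights, k=1)[0])
```

Lower values of `top_k` or `top_p` cut off more of the unlikely tail, which tends to make output more predictable. Higher values leave more room for surprising (and sometimes incoherent) continuations.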