100 Days of Gen AI: Day 11

I started training my own model today! I started with GPT-2, then used this existing dataset of Plato’s Socratic Dialogues to fine-tune it. It’s a relatively small dataset at only 3,550 rows. I’m not sure how good the dataset’s quality is, or whether it will meaningfully improve responses, but it seems worth a try (and much faster than cleaning and preparing my own dataset).

Before I started training, I played around with the existing GPT-2 model to see how it performs. Its output isn’t very intelligible:

To the question:

“What is a life well-lived?”

It responded:

If it’s not life, who can tell? Why does life exist at all? A certain way: when the world is cold, you keep running to where you want it to be or keep moving on. Your energy goes to your home, not to the trees or to other places. If you run to get to a work place and get the best quality of life out of that space, the universe is around you and has the power to create you. You go on vacation in your room; you go to dinner with your family; and your work and friends live off the rest of the time. What is life? It may be an exciting new invention that happens on
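A baseline check like the one above can be sketched with the Hugging Face `transformers` library. This is a minimal sketch, not necessarily the code used for the response above: it assumes `transformers` and `torch` are installed, and the sampling settings in `GENERATION_KWARGS` are illustrative guesses rather than known values.

```python
# Sketch: sample a completion from the stock (not yet fine-tuned) GPT-2 checkpoint.
# Assumption: `pip install transformers torch`. Sampling values are illustrative.

GENERATION_KWARGS = {
    "max_new_tokens": 120,  # cap on how many new tokens are generated
    "do_sample": True,      # sample instead of greedy decoding
    "top_k": 50,            # only sample from the 50 most likely tokens
    "temperature": 0.9,     # slightly sharpen the token distribution
}


def generate(prompt: str, model_name: str = "gpt2") -> str:
    """Return the prompt followed by a sampled continuation."""
    # Imported lazily so this file can be loaded without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token of its own
        **GENERATION_KWARGS,
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `print(generate("What is a life well-lived?"))` downloads the model on first use and prints the prompt plus a continuation. Because sampling is enabled, each run gives a different answer, and the hard cap on new tokens is one reason a response can stop mid-sentence.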

Let’s make this model smarter!

I used ChatGPT to help me set up the necessary training code. It took a few iterations to fix various mistakes in the ChatGPT recommendations and to adapt the code to my dataset, but working with ChatGPT really makes my debugging cycles SO much faster.

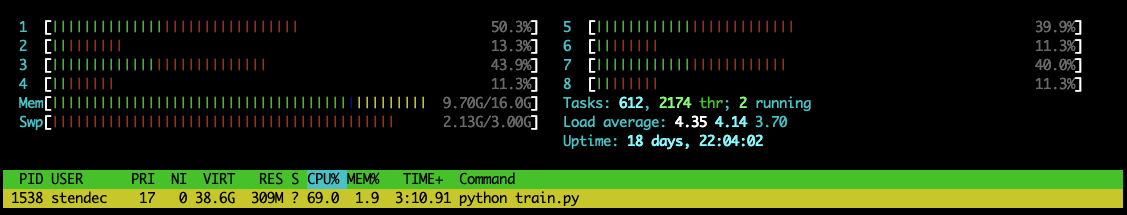
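For readers who want a starting point, a fine-tuning script along these lines can be sketched with the Hugging Face `Trainer`. This is a rough sketch under stated assumptions, not the script shown in the screenshot: the CSV file path, the `text` column name, the output directory, and every hyperparameter are hypothetical placeholders, and it assumes `transformers`, `datasets`, and `torch` are installed.

```python
# Sketch: fine-tune GPT-2 on a small text dataset with the Hugging Face Trainer.
# Assumptions (all hypothetical): the data is a local CSV with a "text" column,
# and the hyperparameters below are placeholders, not tuned values.
import math

NUM_ROWS = 3550  # dataset size mentioned in the post


def steps_per_epoch(num_rows: int, batch_size: int) -> int:
    """Number of optimizer steps in one pass over the data (no gradient accumulation)."""
    return math.ceil(num_rows / batch_size)


def train(data_file: str, text_column: str = "text", output_dir: str = "gpt2-socratic") -> None:
    # Imported lazily so the helper above works without the heavy dependencies.
    from datasets import load_dataset
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        DataCollatorForLanguageModeling,
        Trainer,
        TrainingArguments,
    )

    tokenizer = AutoTokenizer.from_pretrained("gpt2")
    tokenizer.pad_token = tokenizer.eos_token  # GPT-2 ships without a pad token
    model = AutoModelForCausalLM.from_pretrained("gpt2")

    dataset = load_dataset("csv", data_files=data_file)["train"]

    def tokenize(batch):
        # Truncate long rows so every example fits a fixed maximum length.
        return tokenizer(batch[text_column], truncation=True, max_length=512)

    tokenized = dataset.map(tokenize, batched=True, remove_columns=dataset.column_names)

    # mlm=False selects causal language modeling: labels are copied from the inputs.
    collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)
    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=3,
        per_device_train_batch_size=4,
        logging_steps=50,
    )
    trainer = Trainer(model=model, args=args, train_dataset=tokenized, data_collator=collator)
    trainer.train()

    # Save both pieces so the fine-tuned model can be reloaded from output_dir.
    trainer.save_model(output_dir)
    tokenizer.save_pretrained(output_dir)
```

With these placeholder settings, `steps_per_epoch(NUM_ROWS, 4)` comes to 888 steps per epoch, which gives a rough sense of how much work a laptop CPU has to do even for a small dataset.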

Once I had everything ready, I ran `python train.py`. Immediately, I could hear my laptop fan start whirring. You can see from htop that my MacBook is doing some hefty work.

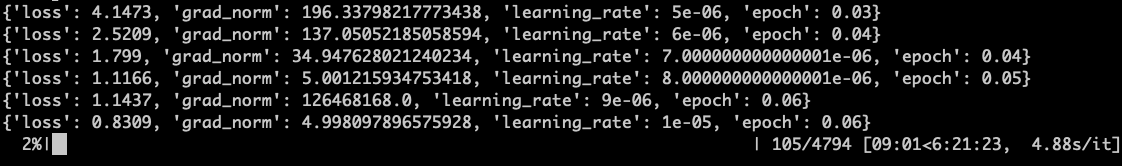

From the training log output, it looks like I’ll need to settle in and just let this run for a few hours.

Exciting day!